Testing and Experimentation

Testing and experimentation are important aspects of any project, especially those utilising a videogame scenario as their primary means of testing (Mohaddesi and Harteveld 2020). Covered here are the results and feedback from the first pilot test, alongside experimentation with other aspects of the project.

Pilot Test Results

On the 7th of March I conducted a small-scale pilot test during a data collection day hosted at the Confetti Institute of Creative Technologies. The pilot test left me with a great deal of data that I could use to view preliminary trends and form early opinions. In addition to the results gathered, the testing also demonstrated clear areas for improvement in both the 3D test scenario and the survey I was utilising. The results of the pilot test are reviewed below. This starts with basic information about the 7 participants, followed by the results of the adapted Game Experience Questionnaire (GEQ) by IJsselsteijn, De Kort and Poels (2013) that I employed, then any insights from my notes and participant feedback, and finally a closing summary of the findings.

The results from the GEQ only cover 5 of the 7 components featured in the standard GEQ. This is because during the pilot test I utilised a version of the GEQ that combined some of the components into a broad negativity score (Johnson, Gardner, and Perry 2018). As such, only the 5 components that were measured are shown here.

The files used as part of the pilot test can be found here.

Pilot Test Results - Basic Participant Information

Participant Gender, Age, and Hours in Game Per Week

Basic information about the participants plays little role in the data collected through the Game Experience Questionnaire. However, collecting it still provides the opportunity to review any trends that may be present, and it demonstrates the average demographic of those who took part in the testing. The gallery above features charts demonstrating the makeup of the pilot test participants and shows their recent and overall experience with video games. Interestingly, all participants felt they were experienced with games.

Pre-GEQ information

The information presented in the gallery above shows the split of which level participants had first, alongside their time taken to complete each level. The chance of having either level first is 50%, so the close split makes sense. The time to complete the diegetic level was a lot longer than the non-diegetic. In my opinion, this is because the non-diegetic puzzle is simply quicker to complete, a key takeaway from testing covered further down on this page. However, the box and whisker graph does seem to show that the time taken to complete the diegetic level varies far more amongst participants than the time taken for the non-diegetic level. This also seems to coincide with the recent experience of participants: the two participants that took far longer than the others to complete the level, whilst reporting they were experienced, reported having spent very little time in games in the past week in comparison to other participants. This seems to support conclusions made by Iacovides and others (2015) regarding the role of expertise in creating a sense of immersion, and by extension in decreasing a better-immersed participant's completion time.

Covered now will be the average participant responses for each component of the GEQ (Game Experience Questionnaire) employed via a Jotform (Jotform Inc 2006) survey. Each component will be accompanied by a gallery with associated tables. A brief summary of my thoughts and any correlations will be given.

For reference, Level A is the level primarily incorporating diegetic signposting, and Level B is the level primarily incorporating non-diegetic signposting. Data can be viewed independently via the button to the left.
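As a rough illustration of how a per-component average can be computed from Likert responses, the following is a minimal Python sketch. The item names, the item-to-component mapping, the 0 to 4 response scale, and the response values are all assumptions made up for illustration; they are not the pilot test data or the actual questionnaire items.

```python
from statistics import mean


def component_scores(responses, component_items):
    """Average the Likert responses belonging to each component."""
    return {
        component: mean(responses[item] for item in items)
        for component, items in component_items.items()
    }


# Hypothetical mapping of questionnaire items to components.
component_items = {
    "competence": ["q1", "q2"],
    "flow": ["q3", "q4"],
}

# Made-up responses for one participant on one level (assumed 0-4 scale).
responses = {"q1": 3, "q2": 4, "q3": 1, "q4": 2}

scores = component_scores(responses, component_items)
```

Running the same function once per participant and per level would give the kind of per-level averages compared in the component sections.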

Competency Component Ratings

Participants’ average competency component ratings suggest that users felt more competent with the non-diegetic level. Whilst the difference between the two is relatively minor, there is a clear rise in the feeling of competency from 4 of the participants when interacting with the non-diegetic level. Participant 4 seems to have no bias for either level, and participants 6 & 7 hold the opposite opinion to the majority, which is reflective of their listed preference too. Time taken to complete the level seemed to play a significant role in how competent participants felt. Strangely, however, competency did seem to increase slightly for both levels the longer participants took to complete them, which is the opposite of what I would have assumed.

Sensory and Imaginative Immersion Component Ratings

Similarly to the competency component, the sensory and imaginative immersion component's averages are very similar. Despite this, there is a minor preference for the diegetic level over the non-diegetic. This, however, seems to be solely down to participant 6’s clear preference for the diegetic level, as 4 of the 7 participants scored the same average on both levels. Sensory and imaginative immersion seems to rise with time taken to complete the level, which makes sense as participants have more time to immerse themselves in the game world. This trend is relatively similar for Level A and Level B, being slightly more pronounced with the diegetic Level A.

Flow Component Ratings

Participant flow rating averages were fairly scattered. It is interesting to me that flow ratings have deviated so much between participants in comparison to immersion. Some academics like Michailidis, Balaguer-Ballester and He (2018) argue that flow and immersion are very similar in nature. However, their differing results here may suggest the two are distinctly different. These differences are present in the time taken to complete correlation scatter graphs too. The trends for S & I immersion were far more pronounced in comparison to flow.

Positive Affect Ratings

The average rating by participants in relation to the positive affect component of the GEQ was fairly balanced. Most participants had fairly similar opinions on either level, with participant 1 showing clear bias towards the non-diegetic level in this component, and participant 6 the opposite. A longer time to complete seems to have coincided with higher enjoyment, more so in the diegetic level. This is most likely down to players having longer to reach a state of immersion, as this trend line is very similar to the trend line seen in the sensory and imaginative immersion correlation chart for the same level.

Negativity

The average negativity rating tended to be higher for the non-diegetic level B amongst participants. I feel this is most probably down to the lack of complexity in the non-diegetic level in comparison to the diegetic level, as two participants have expressed similar thoughts in their feedback. One participant stated:

"I really enjoyed pushing around the boxes through the level (diegetic) and thought it was an interesting mechanic"

With another stating that they preferred level A by saying:

"It was more intellectually stimulating than flicking levers at semi-random"

The second statement also made it clear to me I needed to better signpost the objectives in the non-diegetic level. There seemed to be very little correlation between the time taken to finish the levels and the average negativity scores of participants.

Pilot Test Results - Level Preferences

Level preference amongst participants was fairly evenly split, with a slight preference towards Level B. This was surprising to me as Level A was slightly more polished than Level B. However, judging by user responses, it seems that participants preferred the clear indications and exploration present in Level B to those featured in Level A. This sentiment is further supported by the average competency ratings being higher in Level B than in Level A.

Pilot Test Results - Technical Errors and Participant Suggestions

Following their GEQ responses, participants were asked if they had encountered any errors and whether they had any suggestions. This highlighted some clear issues I had not encountered prior to the test. The primary things highlighted were issues with the simple diegetic puzzle and some doors, alongside suggestions regarding the strength, style and positioning of lighting. User responses can be seen in the raw data files from the pilot test.

Pilot Test Results - Personal Notes

I also made some very rudimentary notes whilst observing participants during play. These notes generally cover the same things participants mention in their own feedback, but I thought their inclusion would provide some insight into my thoughts on the day. These notes are also available in the raw data files from the pilot test. One thing I made a mental note of during the test is the rate at which people clicked through the Likert questions. As the GEQ features 33 separate questions related to each component it measures, it takes quite a lot of time to fill out. This seemed to lead to participants clicking through the questions more quickly as the survey went on, just to finish it. In light of this I have considered using the in-game questionnaire featured as part of the GEQ. This measures all the elements the core version does, but it does so in 12 questions as opposed to 33. This change is discussed in the survey challenges covered further down this page.

Pilot Test Results - Summary

The pilot test gave me a great deal of insight into how users would interact with the test and how they would feel when taking part. Furthermore, it provided me with invaluable primary research that I used both to inform my decisions moving forward and for comparison's sake against my final results.

I'll firstly summarise the results of the survey I conducted. Ultimately, the results I got back were relatively in line with what I was expecting - there was little difference in the average component scores overall. Some studies I reviewed during the proposal highlighted the fact that there was little change between scenarios featuring exclusively diegetic and non-diegetic game elements - the best example of this sentiment being the study conducted by Iacovides and others (2015). Negativity did surprise me somewhat; however, I feel its higher rating in the non-diegetic level is down to a lack of complexity in comparison to the diegetic level, which was a fault of my planning in the lead up to the pilot test.

The technical feedback I got back from this test was invaluable. The testing highlighted multiple glitches that needed to be ironed out before the main testing, such as the ingots colliding with their platforms when teleporting and being catapulted away from their intended positions. Participants' grievances and my own personal observations also demonstrated clear areas for more theoretical improvement. These include the addition of a pause menu, a reduction in mouse sensitivity, and, most importantly for the project, increasing the complexity of the complex puzzle in the non-diegetic level to increase both participants' time in the level and their exposure to non-diegetic signposting, in line with what is present in the diegetic level.

Challenges, Testing, and Experimentation

Covered here will be my experimentation during the project. This will primarily be focused on my work in the Unreal Engine (Epic Games 2022a), however my experimentation with different survey software will also be explored here as it played a major role in my decisions regarding the survey post pilot test.

Unreal Engine

Testing & Challenges

1st February 2023 - Item Dropping Tweak

22nd February 2023 - Block Push Puzzle (Complex Diegetic Puzzle) Issues

29th February 2023 - Challenges with non-diegetic object highlighting

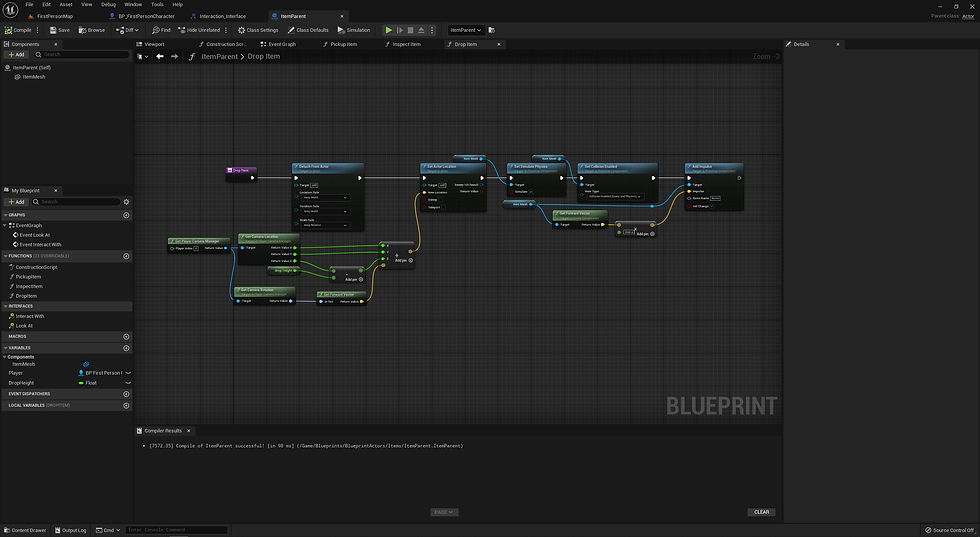

Show the blueprint nodes within the Item_Parent blueprint actor (All items in the scene are children of this blueprint actor)

Level BP in the dev level demonstrating how the event dispatcher is set and called

Demonstration of where in the character BP this is called. Event is called in the check look at function


Covered here will be any issues and testing I have conducted in the Unreal Engine. This will be done in an informal blog-style format as and when I encounter an issue.

Having issues with items clipping into the world; this is down to the drop location of the held item on the player character. Edited the dropping function slightly to drop items from below the player character's camera with a minor amount of force along its forward vector. Did this to ensure objects wouldn’t clip out of the world when dropped into things like they did with the previous setup.

In simpler terms, I changed the starting location of an item that the player dropped. With the new system the item is dropped from inside the character with some force added in the forward direction. By doing this, items are now dropped from the player as opposed to appearing in front of them, which would sometimes cause them to collide with other objects or walls in an undesired way.
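The idea can be sketched outside of Blueprints as follows. This is a minimal Python sketch, not the actual drop function: the `Vec3` type, the `drop_item` helper, the Z-up axis convention, and the default offset and push values are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other):
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def scaled(self, factor):
        return Vec3(self.x * factor, self.y * factor, self.z * factor)


def drop_item(camera_pos, camera_forward, drop_below=30.0, push_strength=150.0):
    """Return (spawn position, impulse) for a dropped item.

    drop_below and push_strength are hypothetical tuning values.
    """
    # Spawn below the camera (inside the character) instead of in front of it,
    # so the item cannot appear inside a wall the player is facing.
    spawn = camera_pos + Vec3(0.0, 0.0, -drop_below)
    # A small push along the camera's forward vector moves the item away.
    impulse = camera_forward.scaled(push_strength)
    return spawn, impulse


spawn, impulse = drop_item(Vec3(0.0, 0.0, 170.0), Vec3(1.0, 0.0, 0.0))
```

Because the item starts inside space the character already occupies, the physics push carries it forward only as far as there is room, rather than placing it directly into nearby geometry.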

During testing using Confetti’s computers I noticed the blocks were not moving the correct distance when pushed - usually off by about 2.6 units/cm. Whilst I am not 100% certain of the cause, Kris (peer) suggested that the lower frames per second (20 on these low-end machines as opposed to the 60+ on my home machine) might be responsible, with the low frame rate leading to the timeline not allowing the change in location to be fully applied before the timeline’s alpha reached 1. The fix for this issue was heavily inspired by a suggestion from Rhys (peer). It involved setting the end location prior to the set world location node being called, ensuring that once the alpha of the lerp reached 1 the block had to have reached the correct distance, making the mechanic frame independent.

In simpler terms, the speed the game ran at would affect how far the sliding diegetic puzzle's block would move. On quick machines like my home computer this wasn't a problem; however, on slower computers this was an issue that would eventually stop the game from working. To combat this, I implemented some script with the input of friends that made the mechanic's movement independent of the speed the game ran at.
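The difference between a frame-dependent and a frame-independent push can be sketched in Python. This is a simplified model under stated assumptions, not the Blueprint itself: `push_incremental` shows one plausible way drift can arise (adding a step every frame), which may not be the exact cause in the project, while `push_fixed_endpoints` mirrors the described fix of fixing the end location up front and interpolating towards it. All function names and parameters are hypothetical.

```python
def lerp(a, b, alpha):
    """Linear interpolation between a and b."""
    return a + (b - a) * alpha


def push_incremental(start, distance, duration, dt):
    """Frame-dependent: adds a step each frame, so the total depends on dt."""
    position, elapsed = start, 0.0
    speed = distance / duration
    while elapsed < duration:
        position += speed * dt
        elapsed += dt
    return position


def push_fixed_endpoints(start, distance, duration, dt):
    """Frame-independent: the end location is fixed before movement begins."""
    end = start + distance
    position, elapsed = start, 0.0
    while elapsed < duration:
        elapsed += dt
        # Clamp alpha so the final frame lands exactly on the end location.
        alpha = min(elapsed / duration, 1.0)
        position = lerp(start, end, alpha)
    return position


# Compare a fast machine with a slower one whose frame time does not
# divide the movement duration evenly.
fast = push_fixed_endpoints(0.0, 100.0, 0.5, 1 / 60)
slow = push_fixed_endpoints(0.0, 100.0, 0.5, 0.03)
drifted = push_incremental(0.0, 100.0, 0.5, 0.03)
```

With fixed endpoints, the frame rate only changes how many intermediate positions are shown; the final position is the same on every machine.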

(This entry is more in the style of logs I made throughout the day, a summary is at the bottom)

Been trying to figure out how to implement a highlight around objects when they’re looked at. I used a simple tutorial online to get the highlight working and I’ve made it work with my interaction system. The issue I’m having is the highlight when looked at can’t be turned off, and my method of attempting to fix this so far is not sufficient as it only works for one class of actor and is not inherited by the actors’ children. Thinking of having some kind of array in the level blueprint that I can hook up to a custom event called whenever the player stops looking at an actor, this is a bit inefficient though and I’ve not implemented it yet, so I’ll see how it goes and report back.

-

Plan worked somewhat. Using an array in the level BP was good for what I wanted but I struggled to find a way in which I could consistently refer to it. Watched a tutorial about event dispatchers whilst googling methods of globally calling an event; these worked great for what I needed and were easily implemented to give the desired effect.

-

This isn’t perfect, I will have to make an array for every different class of actor I need to highlight. This is not too much of an issue for me however as there will be few actors implementing this highlight system.

In simpler terms, I could not get non-diegetic items to be un-highlighted when I looked away from them. The issue here is that I was unable to communicate between my character and the objects I was trying to un-highlight. My fix for this, whilst slightly inefficient, was to gather a list or array of all the objects in the scene that could be highlighted, and then tell them to be un-highlighted if the player was looking at something that could not be highlighted. Given the small scale of this game, this inefficiency was not an issue, but in larger productions this would definitely present an issue as there would be many highlightable objects that the level would have to communicate with.
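The general shape of this approach resembles the observer pattern, and can be sketched in Python. This is a loose analogy to event dispatchers, not a reproduction of the Blueprint graphs: every class and method name here (`EventDispatcher`, `HighlightableItem`, `Level`, `Player`, `check_look_at`) is a hypothetical stand-in.

```python
class EventDispatcher:
    """Keeps a list of callbacks and calls them all when broadcast."""

    def __init__(self):
        self._listeners = []

    def bind(self, callback):
        self._listeners.append(callback)

    def broadcast(self):
        for callback in list(self._listeners):
            callback()


class HighlightableItem:
    def __init__(self, name):
        self.name = name
        self.highlighted = False

    def highlight(self):
        self.highlighted = True

    def clear_highlight(self):
        self.highlighted = False


class Level:
    """Holds the array of highlightable items and binds them to one event."""

    def __init__(self, items):
        self.items = items
        self.on_look_away = EventDispatcher()
        for item in items:
            self.on_look_away.bind(item.clear_highlight)


class Player:
    def __init__(self, level):
        self.level = level

    def check_look_at(self, target):
        if isinstance(target, HighlightableItem):
            target.highlight()
        else:
            # Looking at something that cannot be highlighted: tell every
            # bound item to clear its highlight.
            self.level.on_look_away.broadcast()


lever = HighlightableItem("lever")
crate = HighlightableItem("crate")
player = Player(Level([lever, crate]))
player.check_look_at(lever)
```

The player never needs a reference to individual items; it only raises the event, and the cost of each broadcast grows with the number of bound items, which is the inefficiency noted above.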


Survey Challenges and Testing

Survey Hosting Challenges

Very early draft of the pilot survey in Forms where I found out you couldn't submit files

To properly give context to the challenges I faced during the creation and maintenance of the survey, I first need to explain the decisions I made both before and after the pilot test. When I began making the survey for the pilot test I found that Microsoft Forms (Microsoft 2016) wouldn't allow users to upload files without a Google account. As I initially believed a signed copy of the consent form had to be provided alongside a participant's submission, I decided against using Forms. Instead I began utilising Jotform (Jotform Inc 2006), as I had used it previously and I knew it supported file submissions.

When I conducted the pilot test I found out two important bits of information. The first is that participants are able to give informed consent to testing through clear statements and responses in the survey, negating the need to upload the consent form. The second is that Jotform exports Likert scale questions to Excel extremely poorly; this is better visualised in the raw data from the pilot test. Both of these bits of information made me decide I should move back to Microsoft Forms.

Development on the survey went smoothly from this point. Microsoft Forms (Microsoft 2016) is heavily integrated into Microsoft's other software packages through Microsoft 365, and the user interface to develop and publish surveys on Forms is very similar to the one on Jotform (Jotform Inc 2006), making the move and redevelopment fairly easy.

Game Experience Questionnaire (GEQ) Experimentation

My choices during this phase were split over the course of multiple weeks. As such, this will be done in a more informal format similarly to the experimentation above.

15th of March 2023

I discussed this slightly in the personal notes section of the pilot test. However, as the Game Experience Questionnaire plays a major role in my data collection I felt this needed a section in which I discussed my thinking.

After taking part in the pilot test, a friend of mine expressed his opinion that the number of questions in the survey felt a bit oppressive or overwhelming. This sentiment was echoed during a supervisor meeting the day before the pilot test and in one of my Wednesday sessions in which we reviewed each other's surveys prior to the pilot test. The pilot test survey hosted on Jotform can be found here. This helps demonstrate how the survey looked during the pilot test.

In my mind, following this revelation I can do 1 of 2 things. I can either:

- Utilise the in-game version of the GEQ. This version of the GEQ is described as being "a concise version of the core questionnaire" (IJsselsteijn, De Kort and Poels 2013), and only utilises 2 questions per component as opposed to between 5-6. Whilst this solves my issue, it may also degrade the quality of the results gathered.

- Adapt the GEQ myself and utilise 3-4 questions per component. This reduces the overall size of the questionnaire whilst retaining some of its complexity. This would also give me an opportunity to remove questions that may not relate to my game, like those regarding thoughts about a narrative that is not present. However, similarly to option one, this may degrade the results I gather, and it is untested, unlike the in-game GEQ.

In both of these options I will be scrapping the 5-component system of the GEQ adapted by Johnson, Gardner, and Perry (2018). This system, when tested, does not seem fit for purpose, as scores for the combined components were wildly different. In my mind, combining challenge, which seems fairly neutral in its presentation, with negative affect, which is obviously to do with a participant's negative feelings, into one criterion is counterintuitive and degrades the overall results.

26th of April 2023

Having spent a lot of time fixing issues with the scenario and doing written work over the rest of March and April, I have finally come to a decision on how to proceed with the survey. Whilst this was by no means an easy choice, I have decided to go with the former of the two options posed last month. Whilst a self-made 3-4 question GEQ (IJsselsteijn, De Kort and Poels 2013) would likely provide data with better fidelity, there is an inherent issue that I feel offsets or even completely negates this benefit. If I proceed by only reducing the questions for each of the components by 1-2, the Likert sections of the questionnaire will feature approximately 28 statements for each level. This for me is still too long; participant engagement in the survey is a necessity for the collection of accurate quantitative data, and I do not wish to contribute to phenomena like survey fatigue that will further negatively affect this study and potentially others being conducted (Van Mol 2017). Owing to this, the fact that the in-game version of the GEQ has been utilised before by others, and because it is touted by IJsselsteijn, De Kort and Poels (2013) as being "a concise version of the core questionnaire", my final decision is to utilise the in-game version of the GEQ as part of the main testing.